Key Takeaways

- Anthropic announced on February 4, 2026 that Claude will remain ad-free, with no sponsored links inside chats and no advertiser influence on responses.

- The company argues that AI conversations are different from search or social feeds because people often share more context, including sensitive details.

- Anthropic ties this policy to Claude's constitution, where “genuine helpfulness” is a core principle for model behavior.

- Independent research points to both promise and risk when people rely on AI for emotional support, reinforcing the need for caution and clear boundaries.

- Access is still a stated goal: Anthropic says it is expanding education efforts globally, including a program with educators across 63 countries.

If we want a practical summary first, jump to Practical checklist for stronger AI conversations.

If trust is our main concern, see Trust and safety when AI conversations get personal.

AI conversations need a clear place to think

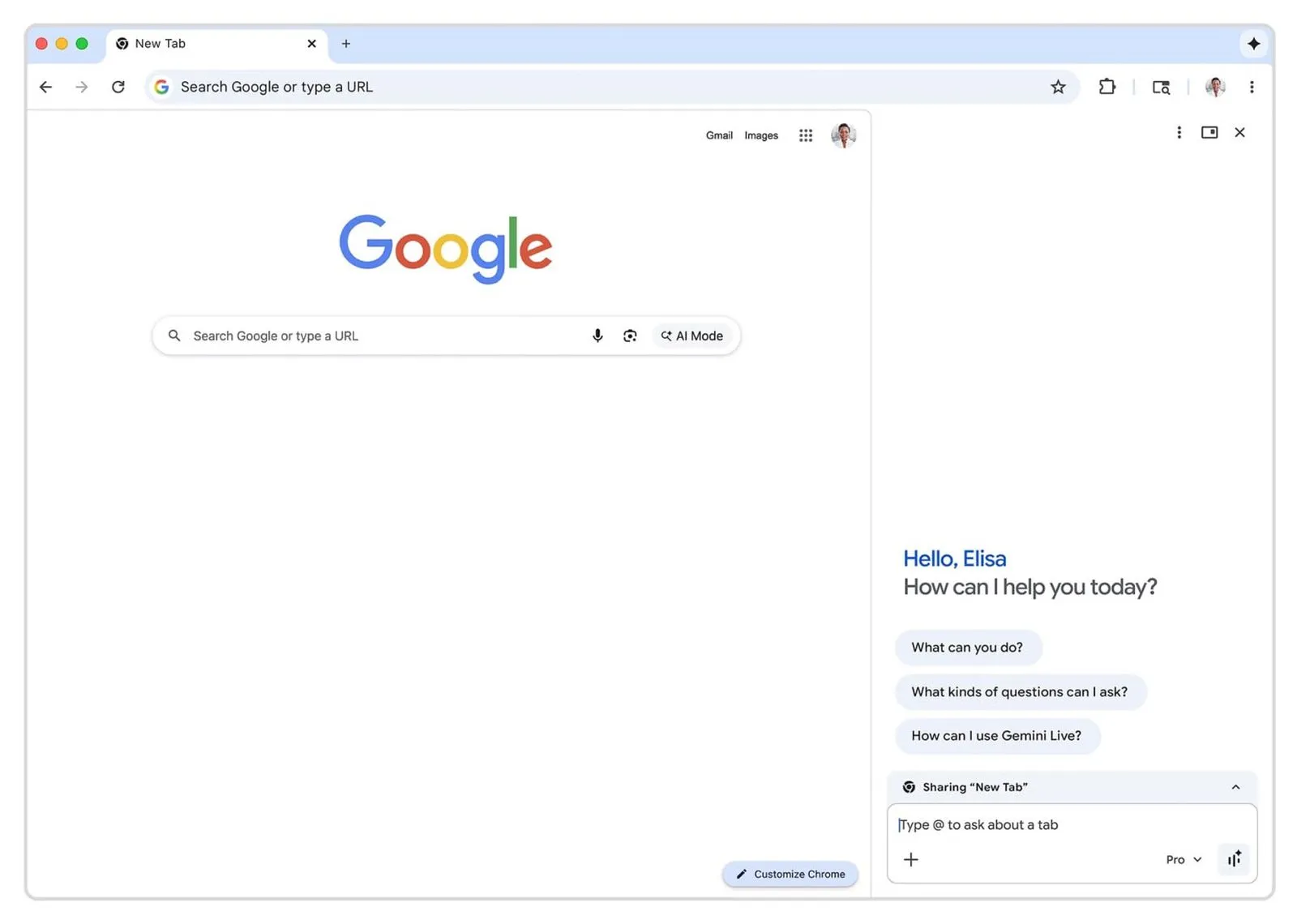

Advertising is one of the most common ways digital products make money. Anthropic’s announcement takes a different direction: it says Claude should be a clean workspace for thought, not an ad surface. In simple terms, the company is saying that AI conversations should prioritize user intent over commercial pressure.

This matters because AI conversations are not just short keyword searches. They are often open-ended, and many people use them to reason through work tasks, personal decisions, and difficult questions [1]. If ad incentives enter that flow, we risk blurring the line between advice and promotion, and that can erode trust quickly.

What Anthropic announced—and why it is important

Anthropic’s policy is direct: no sponsored chat links, no hidden product placement, and no advertiser-driven response logic inside Claude [1]. That is a concrete product decision, not just a values statement. It gives users clearer expectations before they start AI conversations about work, money, health, or family planning.

The company also explains its revenue model: paid subscriptions and enterprise contracts. We should read this as a governance choice. If revenue comes from subscribers and business customers, the product team can focus on whether AI conversations are useful, accurate, and respectful, rather than on whether people click ads [1].

How this connects to Claude’s values

Anthropic links its ad-free stance to Claude’s constitution, where “genuine helpfulness” sits beside safety, ethics, and policy compliance as a core aim [2]. This matters because product rules and training rules need to point in the same direction; if they conflict, the user experience becomes inconsistent.

From a practical standpoint, we can treat this as alignment between business incentives and user outcomes. For everyday users, that means AI conversations are less likely to carry hidden pressure toward purchases, unless the user explicitly asks to shop or compare options.

Trust and safety when AI conversations get personal

Anthropic’s post highlights a key point: people often share sensitive information in assistant chats, sometimes in ways they would not in a search bar. That is why trust design matters. When people feel emotionally exposed, even subtle commercial steering can feel intrusive.

Outside Anthropic, research supports a careful approach. A 2025 mixed-methods analysis in the Journal of Medical Internet Research warns that commercial pressure can conflict with responsible mental health support in conversational systems [3]. We should not read this as “AI is always bad,” but as a call for strong boundaries and clear purpose.

Stanford HAI also reported that therapy-style chatbots can show harmful patterns, including unsafe or stigmatizing behavior in sensitive contexts [4]. So when AI conversations move into emotional territory, our standard should be higher, not lower. Safety, transparency, and user control need to come first.

Access without ads: the hard part

A fair question is whether an ad-free model can still widen access. Anthropic argues that it can, and points to education programs, including a Teach For All initiative reaching educators across 63 countries [5]. This suggests the company is trying to expand use through partnerships and training instead of chat-based advertising.

We should be realistic: no policy solves everything. But this direction gives users a clearer contract. In day-to-day terms, AI conversations can stay focused on the task at hand (writing, planning, learning, and problem solving) without an extra commercial layer inside the answer itself [1].

Practical checklist for stronger AI conversations

When we use assistants for real work, we can strengthen our own habits:

1) Set intent before starting AI conversations

Write one sentence about what outcome we want (for example: “compare two options with pros and cons”). This keeps the chat grounded.

2) Separate facts from advice

Ask the model to label factual claims, assumptions, and suggestions as separate bullets. That makes review easier, because we know which parts still need checking.

3) Ask for sources on high-stakes topics

For finance, health, legal, or parenting decisions, require citations and then verify them ourselves.

4) Keep personal details minimal

Share only what is needed for the task. Sharing less personal context lowers privacy risk.

5) Use AI conversations as a draft partner, not a final authority

For important decisions, we should always add human judgment, domain experts, or official guidance.

Final perspective

The core message from Anthropic is straightforward: if we want AI to feel like a reliable workspace, we need incentives that protect user trust. An ad-free stance does not guarantee perfect output, but it removes one major conflict of interest at the point where many people now think, plan, and decide.

As AI conversations become part of everyday life, the standard should be simple: clear intent, clear incentives, and clear accountability. That is how we keep these tools useful for real people in real situations.

Citations

1. Anthropic. “Claude Is a Space to Think.” Anthropic, 4 Feb. 2026.

2. Anthropic. “Claude’s New Constitution.” Anthropic, 22 Jan. 2026.

3. Moylan, Kayley, and Kevin Doherty. “Conversational AI, Commercial Pressures, and Mental Health Support: A Mixed Methods Expert and Interdisciplinary Analysis.” Journal of Medical Internet Research, 25 Apr. 2025.

4. Wells, Sarah. “Exploring the Dangers of AI in Mental Health Care.” Stanford HAI, Stanford University, 11 June 2025.

5. Anthropic. “Anthropic and Teach For All Launch Global AI Training Initiative for Educators.” Anthropic, 21 Jan. 2026.